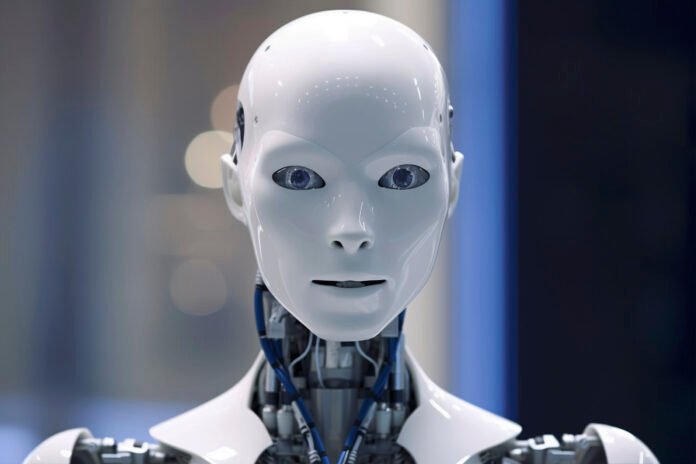

Much of the rapid progress in artificial intelligence (AI) is driven by deep learning, which lets models learn useful representations directly from data during training. Because it largely removes the need for tedious manual feature engineering, deep learning has become a cornerstone of AI-driven technological progress.

Meta, the parent company of Facebook, WhatsApp, and Instagram, is now pushing into a new frontier: tactile AI, a technology that lets robots sense and interact with their environment in ways akin to human touch. Meta recently introduced three tools designed to bring robots closer to human sensory perception: Sparsh, Digit 360, and Digit Plexus.

Why Develop Tactile AI Tools?

The primary goal of these tools is to create robots that do more than replicate tasks: robots that actively engage with their surroundings, much as humans do.

- Sparsh: A general-purpose AI model (an encoder for vision-based tactile sensors) that helps robots interpret touch signals across different sensors and tasks.

- Digit 360: A fingertip-shaped tactile sensor that can detect very fine sensations, such as the prick of a needle.

- Digit Plexus: A standardized hardware and software platform for integrating multiple tactile sensors into a robotic hand, simplifying the collection and analysis of touch data.

Together, these tools are intended to help robots perform complex tasks that require a “human touch,” particularly in sensitive fields such as healthcare, where precision and delicacy are vital.

Impact of Tactile AI on Society

The rise of tactile AI raises critical questions: Will this technology foster new levels of collaboration between humans and robots, or will it introduce challenges society may not be prepared to address?

Ali Ahmed, Co-founder and CEO of Robomart, believes:

“As robots gain new sensory capabilities and higher levels of autonomy, we must reconsider their role in society. Meta’s efforts are a significant step towards equipping robots with human-like senses, paving the way for more integrated relationships between humans and machines.”

To advance human-robot interaction, Meta has also developed PARTNR, a benchmark for evaluating how well AI agents collaborate with humans on everyday household tasks. PARTNR is used to assess the planning and reasoning of agents built on large language models (LLMs) as they work alongside people in shared environments.

Challenges and Industry Collaborations

Experts recognize the potential, but challenges remain. Ram Palaniappan, CTO at TEKsystems, states:

“Significant effort is needed to scale this research into practical applications in real-world settings.”

Meta is collaborating with GelSight Inc. and Wonik Robotics to bring these innovations to market. GelSight will manufacture the Digit 360 sensor, which is set for release next year, while Wonik Robotics will produce the next-generation Allegro robotic hand, which uses Digit Plexus to support precise, touch-sensitive manipulation.

Are We Ready for Robots That “Feel”?

The evolution of tactile AI raises a pivotal question: Are we prepared for a future where robots “feel”? Some experts argue that tactile AI could transform how robots understand the physical world, enabling deeper collaboration between humans and machines.

Others, like Agustin Huerta, VP of Digital Innovation at Globant, remain skeptical:

“While sensory capabilities can enhance robots’ environmental understanding, replicating human-like senses may not be achievable—or even necessary—in the near future.”

As this technology matures, building a healthy relationship between humans and robots will be critical to how well society adapts.